Using 152Ki, which exceeds its request of 0. FailedScheduling 8m45s default-scheduler 0/1 nodes are available: 1 node(s) had untolerated taint. Redis was using 32Ki, which exceeds its request of 0. The node was low on resource: ephemeral-storage. Container awx-task was using 3427520Ki, which exceeds its request of 0. Container awx-ee was using 4425468Ki, which exceeds its request of 0.

THE NODE WAS LOW ON RESOURCE EPHEMERAL STORAGE FULL

Important When choosing a confidential VM with full OS disk encryption before VM deployment that uses a customer-managed key (CMK). Local SSDs provide higher throughput and lower latency than standard.

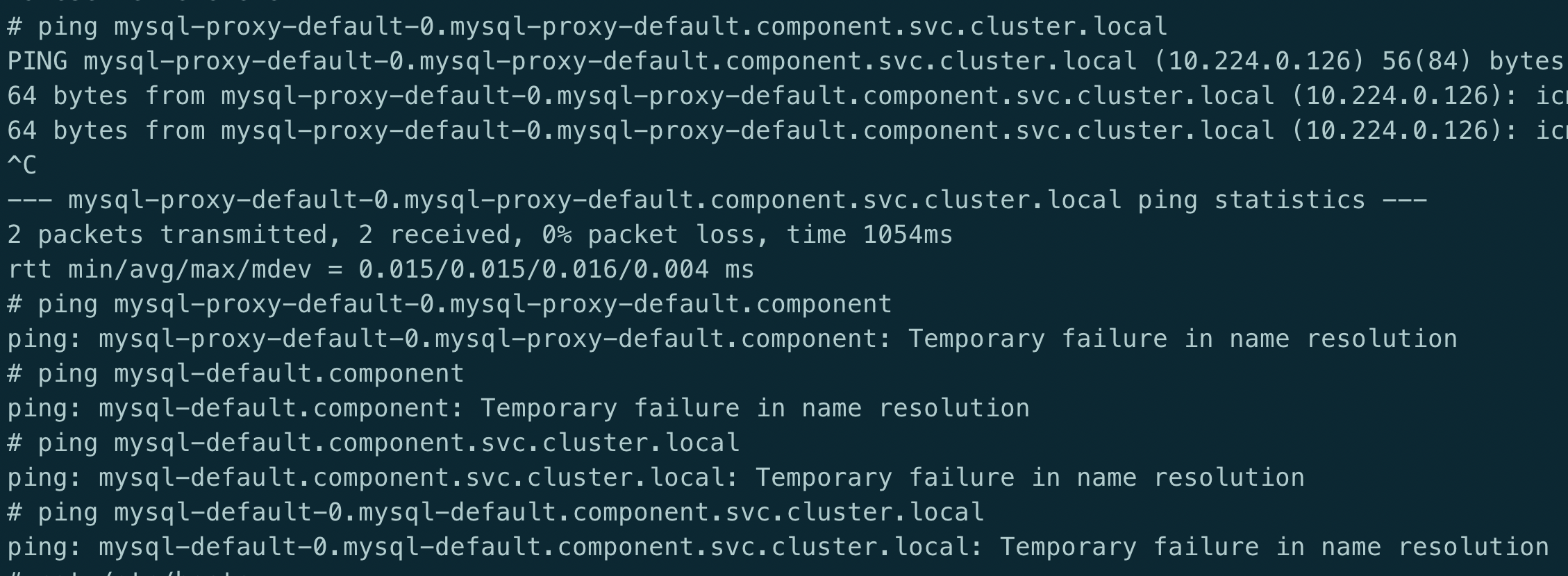

There are two basic ways of creating the primary partition: root and runtime. Root: this partition holds the kubelet root directory, /var/lib/kubelet/ by default, and the /var/log/ directory. This partition can be shared between user pods, the OS, and Kubernetes system daemons.

Container wait was using 60Ki, which exceeds its. To resolve this issue, open a shell in your pod while the process is running and run df -h to see which mounted location is consuming your storage and how much capacity is still available. We're seeing some workflow pod failures with this error: The node was low on resource: ephemeral-storage.
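The df -h check described above can also be scripted. The following is a minimal sketch in Python, assuming it runs somewhere the paths of interest are visible (inside the pod or on the node). The helper name `report_usage` and the list of paths are illustrative assumptions, not part of any Kubernetes tooling.

```python
import os
import shutil


def report_usage(paths):
    """Return (path, total_bytes, free_bytes, free_percent) for each existing path."""
    rows = []
    for path in paths:
        if not os.path.exists(path):
            continue  # skip paths that do not exist on this machine
        usage = shutil.disk_usage(path)
        free_pct = 100.0 * usage.free / usage.total if usage.total else 0.0
        rows.append((path, usage.total, usage.free, free_pct))
    return rows


if __name__ == "__main__":
    # "/" is checked first; the other two are the kubelet default locations
    # named in the text and may not exist inside a container.
    for path, total, free, pct in report_usage(["/", "/var/lib/kubelet", "/var/log"]):
        print(f"{path}: {free / 2**30:.1f} GiB free of {total / 2**30:.1f} GiB ({pct:.0f}% free)")
```

Running this periodically while the workload is active shows which location is filling up, which is the same information df -h gives interactively.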

The node was low on resource: ephemeral-storage. Ephemeral local storage is always made available in the primary partition. This issue occurs when the node runs short of temporary storage, for example while applications process their jobs and write temporary or cache data. Now, when I try to run jobs that use Docker (building images), the pods are evicted with the following error: Container kube-proxy was using 12Ki, which exceeds its request of 0. (gauge) Ephemeral storage limit of the container (requires Kubernetes v1.8+).
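Eviction messages like the ones quoted above list each container's usage in Ki, which makes it hard to spot the largest consumer at a glance. Here is a small Python sketch that extracts those figures and ranks them. It assumes the message wording shown in this page ("Container NAME was using NNNKi") and only handles the Ki suffix; the function names are hypothetical.

```python
import re

# Matches fragments such as
# "Container awx-task was using 3427520Ki, which exceeds its request of 0."
USAGE_RE = re.compile(r"Container (\S+) was using (\d+)Ki")


def container_usage(message):
    """Return {container_name: usage_in_KiB} parsed from an eviction message."""
    return {name: int(kib) for name, kib in USAGE_RE.findall(message)}


def largest_first(message):
    """Return (name, usage_in_GiB) pairs, largest consumer first."""
    usage = container_usage(message)
    ranked = sorted(usage.items(), key=lambda item: item[1], reverse=True)
    return [(name, kib / 1024**2) for name, kib in ranked]


if __name__ == "__main__":
    msg = (
        "The node was low on resource: ephemeral-storage. "
        "Container awx-task was using 3427520Ki, which exceeds its request of 0. "
        "Container awx-ee was using 4425468Ki, which exceeds its request of 0. "
        "Container kube-proxy was using 12Ki, which exceeds its request of 0."
    )
    for name, gib in largest_first(msg):
        print(f"{name}: {gib:.2f} GiB")
```

For the messages quoted on this page, awx-ee (about 4.2 GiB) and awx-task (about 3.3 GiB) are the containers worth investigating, while kube-proxy at 12Ki is negligible.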

Here are the default hard limits in the k8s code. I have my Jenkins slave configured as Kubernetes cluster nodes. The node was low on resource: ephemeral-storage. This means the available disk space on one of the worker nodes was less than 10%. To fix this issue, I created another worker node with larger disk capacity.
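The 10% figure matches the kubelet's default hard eviction threshold for node filesystem space (nodefs.available<10%). As a rough illustration of that comparison, here is a Python sketch; the function name and the use of `shutil.disk_usage` on "/" are assumptions made for the example, and the kubelet's own accounting may differ in detail.

```python
import shutil


def is_under_disk_pressure(available_bytes, capacity_bytes, threshold_percent=10.0):
    """Return True when available space is below threshold_percent of capacity."""
    if capacity_bytes <= 0:
        raise ValueError("capacity_bytes must be positive")
    return 100.0 * available_bytes / capacity_bytes < threshold_percent


if __name__ == "__main__":
    # Check the filesystem that holds "/" on the machine running this script.
    usage = shutil.disk_usage("/")
    print(is_under_disk_pressure(usage.free, usage.total))
```

A node with 100 GiB of disk would cross this threshold once less than 10 GiB remains, which is why moving to a worker with a larger disk makes evictions less likely for the same workload.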

Today, some of my Pods were in Evicted status in the dev environment:

    nginx-7cf887bf7d-kmgl9 0/1 Evicted 0 5h29m
    nginx-7cf887bf7d-kszwn 0/1 Evicted 0 5h29m
    nginx-7cf887bf7d-m9cvg 0/1 Evicted 0 5h29m
    nginx-7cf887bf7d-sps6q 0/1 Evicted 0 5h29m

When I described the pod, I saw the "Pod The node was low on resource:" message:

    $ kubectl describe pod nginx-7cf887bf7d-ch7mn
    Name: nginx-7cf887bf7d-ch7mn
    Namespace: default
    Priority: 0
    PriorityClassName:
    Node: ip-100-64-5-…
    Start Time: Tue, 13:14:16 +0300
    Labels: app=nginx
            pod-template-hash=7cf887bf7d
    Annotations:
    Status: Failed
    Reason: Evicted
    Message: Pod The node was low on resource.

All the pods of a node are in Evicted state due to 'The node was low on resource: ephemeral-storage'.

amount of compute resource requests of all pods placed in a node does not. The amount of CPU, memory, and ephemeral storage a container needs can be.
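When many pods are evicted at once, it helps to pull their names out of the pod listing in one step. The sketch below, in Python, assumes the listing has the usual whitespace-separated columns seen above (name, ready, status, restarts, age); the function name `evicted_pods` is hypothetical.

```python
def evicted_pods(get_pods_output):
    """Return the names of pods whose STATUS column is 'Evicted'."""
    names = []
    for line in get_pods_output.splitlines():
        fields = line.split()
        # Expected columns: NAME READY STATUS RESTARTS AGE
        if len(fields) >= 3 and fields[2] == "Evicted":
            names.append(fields[0])
    return names


if __name__ == "__main__":
    listing = """\
nginx-7cf887bf7d-kmgl9 0/1 Evicted 0 5h29m
nginx-7cf887bf7d-kszwn 0/1 Evicted 0 5h29m
nginx-7cf887bf7d-m9cvg 0/1 Evicted 0 5h29m
nginx-7cf887bf7d-sps6q 0/1 Evicted 0 5h29m
"""
    for name in evicted_pods(listing):
        print(name)
```

The resulting names can then be inspected one by one with kubectl describe pod, or cleaned up once the underlying storage problem is fixed.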
